In this article we point out some objective strengths of web server log analysis compared to JavaScript based statistics, such as Google Analytics. Depending on your preferences and the type of website, you might find some, all, or none of these arguments applicable. In any case, everyone should at least be aware of the differences in order to make the right decision.

1. You don’t need to edit HTML code to include scripts

Depending on how your website is organized, this could be a major task, especially if it contains a lot of static HTML pages. Adding script code to all of them will surely take time. If your website is based on a content management system with a centralized design template, you’ll still need to be careful not to forget adding the code to any custom pages outside the CMS.

2. Scripts take additional time to load

Regardless of what Google Analytics officials say, actual experience proves otherwise. Scripts are scripts and they take some time to load. If the external file is located on a third-party server (as is the case with Google Analytics), the slowdown is even more noticeable, because the visitor’s browser must resolve another domain.

As a solution, they suggest putting the inclusion code at the end of the page. In that case the page does appear to load more quickly, but there’s a good chance that the visitor will click another link before the script is executed. As a result, these hits never appear in your stats and are lost forever.

3. If website exists, log files exist too

With JavaScript analytics, stats are available only for periods when the code was included. If you forget to put the code on some pages, the opportunity is lost forever. Similarly, if you decide to start collecting stats today, you’ll never be able to see stats from yesterday or before. The same applies to goals: metrics are available only after you decide to track them. With some log analyzers, you can freely add more goals at any time and still analyze them based on log files from the past.
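As a rough illustration of retroactive goal tracking, here is a minimal Python sketch that counts successful requests for a goal page per day from existing log lines. It assumes the server writes the common Apache-style access log layout; the function name and the goal path used below are hypothetical.

```python
import re
from collections import Counter

# Assumed layout: ... [10/Oct/2008:13:55:36 +0000] "GET /path HTTP/1.1" 200 ...
ENTRY = re.compile(
    r'\[(?P<day>[^:\]]+):[^\]]*\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)

def goal_hits_per_day(lines, goal_path):
    """Count successful GET requests for goal_path, grouped by log date."""
    per_day = Counter()
    for line in lines:
        m = ENTRY.search(line)
        if not m or m.group("method") != "GET" or m.group("status") != "200":
            continue
        # Ignore any query string when comparing against the goal path.
        if m.group("path").split("?", 1)[0] == goal_path:
            per_day[m.group("day")] += 1
    return per_day
```

Because the input is just lines of text, the same function could be run over archived logs, for example `goal_hits_per_day(open("access.log"), "/thank-you.html")`, where both the file name and the path are placeholders.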

4. Server log files contain hits to all files, not just pages

By using solely JavaScript based analytics, you don’t have any information about hits to images, XML files, Flash (SWF), programs (EXE), archives (ZIP, GZ), etc. Although you could consider these hits irrelevant, most webmasters don’t. Even if you don’t usually maintain other types of files, you must have some images on your website, and they could be linked from external websites without your knowledge.
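To make the hotlinking case concrete, the following Python sketch lists image requests whose referrer is on a different host than your own. It assumes Apache-style combined log lines (with referrer and user agent fields); the extension list, function name, and `own_host` parameter are illustrative choices, not part of any particular analyzer.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Assumed layout: "GET /path HTTP/1.1" 200 2326 "http://referrer/..." "agent"
REQUEST = re.compile(
    r'"\S+ (?P<path>\S+)[^"]*" \d{3} \S+ "(?P<referrer>[^"]*)"'
)
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif")

def hotlinked_images(lines, own_host):
    """Count image hits per (referring host, path) for external referrers."""
    counts = Counter()
    for line in lines:
        m = REQUEST.search(line)
        if not m:
            continue
        path = m.group("path")
        if not path.split("?", 1)[0].lower().endswith(IMAGE_EXTENSIONS):
            continue
        # A referrer of "-" (none recorded) has no hostname and is skipped.
        ref_host = urlparse(m.group("referrer")).hostname
        if ref_host and ref_host != own_host:
            counts[(ref_host, path)] += 1
    return counts
```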

5. You can investigate and control bandwidth usage

Although you might not be aware of it, most hosting providers limit bandwidth usage and usually base their pricing on it. Bandwidth usage costs them and, naturally, it most probably costs you as well. You would be surprised how many domains (usually from third-world countries) poll your whole website on a regular basis, possibly wasting gigabytes of your bandwidth every day. If you can identify these domains, you can easily block their traffic.
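A simple way to see who consumes the most bandwidth is to add up the response sizes per client. The Python sketch below assumes Apache-style access log lines in which the field after the status code is the number of bytes sent (or `-` when no body was sent); the function name is hypothetical.

```python
import re
from collections import Counter

# Assumed layout: host ident user [time] "request" status bytes ...
ENTRY = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} (?P<size>\S+)'
)

def bandwidth_by_host(lines):
    """Sum the bytes sent to each client host or IP address."""
    totals = Counter()
    for line in lines:
        m = ENTRY.match(line)
        # A size of "-" means no response body, so there is nothing to add.
        if m and m.group("size").isdigit():
            totals[m.group("host")] += int(m.group("size"))
    return totals
```

Calling `bandwidth_by_host(lines).most_common(10)` would then list the ten heaviest clients, which are the candidates to investigate before blocking anything.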

6. Bots (spiders) are excluded from JavaScript based analytics

Similar to the previous point, some (bogus) spiders misbehave and waste your bandwidth without bringing you any benefit. In addition, server logs contain information about visits from legitimate bots, such as Google or Yahoo. By using solely JavaScript based analytics, you have no idea how often they come and which pages they visit.
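Bot visits can be picked out of the logs by looking at the user agent field. The following Python sketch assumes Apache-style combined log lines and uses a deliberately crude, illustrative list of substrings to recognize bots; a real analyzer would likely rely on a maintained list, and bots that fake a browser user agent would not be caught this way.

```python
import re
from collections import Counter

# Assumed layout: "GET /path HTTP/1.1" 200 2326 "referrer" "user agent"
REQUEST = re.compile(
    r'"\S+ (?P<path>\S+)[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
# Illustrative markers only; matched case-insensitively against the agent.
BOT_MARKERS = ("bot", "spider", "crawl", "slurp")

def bot_hits(lines):
    """Count hits per (user agent, path) for agents that look like bots."""
    hits = Counter()
    for line in lines:
        m = REQUEST.search(line)
        if not m:
            continue
        agent = m.group("agent")
        if any(marker in agent.lower() for marker in BOT_MARKERS):
            hits[(agent, m.group("path"))] += 1
    return hits
```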

7. Log files record all traffic, even if JavaScript is disabled

A certain percentage of users choose to turn off JavaScript, and some use browsers that don’t support it at all. These visits can’t be identified by JavaScript based analytics.

8. You can find out about hacker attacks

Hackers can attack your website with various methods, but none of them would be recorded by JavaScript analytics. As every access to your web server is recorded in log files, you are able to identify attacks and save yourself from damage (by blacklisting the attackers’ domains or closing security holes on your website).

9. Log files contain error information

Without log files, you generally don’t have any information about errors and status codes (such as Page not found, Internal server error, Forbidden, etc.). As a result, you miss possible technical problems with your website that lower visitors’ overall perception of its quality. Moreover, any attempt to access forbidden areas of your website can be easily identified in the logs.
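Finding these errors comes down to filtering on the status code field. Here is a minimal Python sketch, again assuming Apache-style access log lines, that counts client and server errors (4xx and 5xx) per path; the function name is hypothetical.

```python
import re
from collections import Counter

# Assumed layout: "GET /path HTTP/1.1" 404 ...
REQUEST = re.compile(r'"\S+ (?P<path>\S+)[^"]*" (?P<status>\d{3}) ')

def error_hits(lines):
    """Count requests per (status, path) for 4xx and 5xx responses."""
    errors = Counter()
    for line in lines:
        m = REQUEST.search(line)
        # The first digit of the status tells client (4) from server (5) errors.
        if m and m.group("status")[0] in ("4", "5"):
            errors[(m.group("status"), m.group("path"))] += 1
    return errors
```

Frequent 404 entries usually point to broken links, while repeated 403 entries for the same protected path may indicate someone probing a forbidden area.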

10. By using a log file analyzer, you don’t give away your business data

And last but not least, your stats are not available to a third party that can use them at its convenience. Google bought all rights to a web statistics product that was popular and quite expensive at the time (Urchin), repackaged it, and then allowed anyone to use it for free. The question is: why? They surely get something in return, as the Google Analytics license agreement allows them to use your information for their purposes, and even to share it with others if you choose to participate in the sharing program.

What could they possibly use it for? Just to give a few obvious ideas: tweaking AdWords minimum bids, deciding how to prioritize ads, improving their services (and profits), all based on traffic data collected from you and others.

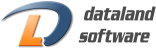

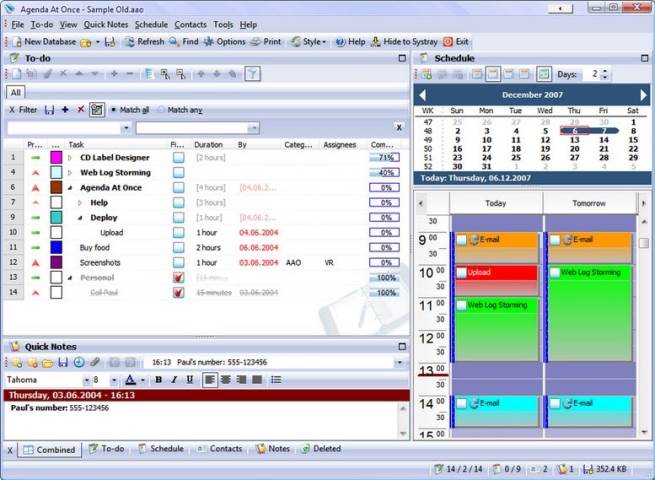

Web Log Storming – an interactive web log analyzer

Software Development

Software Development Web Design and Programming

Web Design and Programming Examples of our Work

Examples of our Work

Regarding giving your data to Google: if you are already using their AdSense program, then they already have all the data about your site.

These arguments make a lot of sense, but JavaScript based analytics can measure some additional things that web log analyzers can’t by default:

screen resolution / color depth

visitor stay time before leaving the page

which link a user clicked on, not just which URL; this can also be tracked with redirectors, but that annoys your users

user events on the page such as navigation across AJAX elements

whether a user started to fill in a form but got bored and didn’t submit it

client side errors: JavaScript, broken images, load time, etc.

Not to say that these trump the issues you raised, but they are worth considering.

Personally, I *prefer* numbers that are limited to real people running real browsers who stayed on my page for at least the couple of seconds it takes to load tracking scripts. Data on real, engaged users is more interesting to me (and my advertisers) than counting every GoogleBot hit to inflate my numbers.

If you’re using something like Google Analytics, most of your users probably already have the script cached anyway. It only has to be served once for any number of sites using its tracking.

Thank you all for your comments.

@Jay: Yes, if you have AdSense on your website, point 10 doesn’t make sense.

@Greg: That’s right, JS has its own advantages and that’s why a lot of people use both.

@Dave Ward: Of course, your mileage may vary, depending on preferences and website type (as we pointed out in the first paragraph).

There is some irony in this post.

Firebug error due to ABP blocking Google Analytics:

_gat is not defined

[Break on this error] var pageTracker = _gat._getTracker("UA-1852659-1");

That said, irony aside, this is a very good post, especially the part about what JavaScript can only report on. One thing not really touched on as much: Google decides, based on its own algorithms, which traffic data to allow and deny. Most of that traffic is bad, but at the same time, did you have a choice in the matter?

It’s a shame the web log analysers haven’t been better maintained and updated for Web 2.0, so to speak.

@Chris

> It’s a shame the web log analysers haven’t been better maintained and updated for Web 2.0, so to speak.

Some developers are still working. 😉 If you have any ideas to share, feel free to use the Support link at the top of this page.

@vradmilovic

Alas, I was thinking more of the open source type, and not those limited to Windows or which you have to pay for. That said…

I will think about what would make a solid Web 2.0 one. Not that it means much; really, who am I? But it sounds good.

Most of your points are okay, but logging can be prohibitive on sites with high request rates. Logging thousands of hits per second does not work without specialized logging infrastructure or high-bandwidth I/O channels and disk arrays, per server.

Your position doesn’t state whether you’re approaching this from a diagnostic or a marketing stance. In short, it feels as if you’re playing both positions to desperately expand a top-10 list.

Honestly, the correct solution would be a combination of both log analysis and JavaScript tagging.

Let’s break this down:

#1: This is quite lazy. With some foresight, the code could also be auto-generated within a view/template/rendering action.

#2: It sounds like you’re really just not happy with Google’s performance, which can be resolved by running your own analysis service. Yes, it’s easier than you think.

#3: Again, lazy. You’re arguing that it’s idiot-proof. Also, if you have the JavaScript-generated dataset, the administrator can run their own custom queries.

#4: I’ll buy this. Though, with some web server configuration, you can force requests to be checked to ensure that the referring domain is legitimate.

#5: This can be accomplished through JavaScript tagging as well.

#6: I’ll buy this.

#7: I’m sorry, but nothing is 100%. Log analysis’ biggest enemy is the web cache. Only dynamic content is guaranteed to bust a caching strategy. You are potentially under-reporting the actual metrics by relying on only one source.

#8: Maybe. This is a very passive/reactive approach, though. If security is that much of a concern, a specialist should be consulted.

#9: I’ll buy this.

#10: Again, this is resolved by running your own analysis service. Not all page-tagging solutions are Google operated.

I understand that you wrote a log analysis product and would like to tout its benefits, but there’s no need to bend the truth to fit your agenda. Be straight with your readers.

Has anyone mentioned the cost of the web log analysing products?

@MikeHerrera: Thank you for your comment. It was not our intention to “step on toes” with this post, but some comments suggest that it did. The first paragraph states that not all reasons are applicable to every situation, but some readers seem to have missed it.

> playing both positions to desperately expand a top-10 list.

I’m talking about both positions because I actually do “wear both hats”, as most other small company owners do. As for “desperation”, it doesn’t make much difference whether there are 10, 9, 5 or 15 reasons…

> Let’s break this down:

Please don’t assume that *we* have a problem with changing code or building our own tracking system. 🙂 For this website, we have built a template engine that centralizes such modifications. But you also shouldn’t assume that all webmasters do the same, or that they have enough resources and/or knowledge to build their own tracking system or to hire professionals to do certain tasks for them.

I agree: using both methods is the best approach (as I noted in one of the previous posts), but I think it’s better to use a log analyzer alone than a JS solution alone.

@CraigB: The monetary cost is similar for both methods, starting at $0 (there are decent free/open source log analyzers available, and there are also paid JS solutions).

Interesting post! I can think of at least 20 reasons for not switching to log analyzers exclusively.

I’ll give you just 4 that have a huge impact on the data you get:

1. Caching will always be a problem as your servers will not be able to report pages loaded from computer or server caches

2. No way to track returning visitors. You need cookies for that and code to read them 🙂

3. No way to track user interaction with elements on your website

4. No way to do ecommerce tracking and incredibly difficult to funnel processes and get real actionable data.

From a programmer’s point of view it might be useful to use log files instead of JavaScript tracking, but from a business point of view that would not be that friendly. As for Google knowing too much about your website… well, it already knows, with or without Google Analytics in place. Maybe if you stop it from crawling your website you take care of that issue, but we all know nobody wants that. 🙂